Introduction

I decided to analyze an article describing some interesting details of exactly-once stream processing. The thing is that authors sometimes misunderstand important terms. Analyzing the article will clarify many aspects and details, and exposing its illogicalities and oddities lets you fully grasp the concepts and their meaning.

Let’s get started.

Analysis

Everything starts very well:

Distributed event stream processing has become an increasingly hot topic in the area of Big Data. Notable Stream Processing Engines (SPEs) include Apache Storm, Apache Flink, Heron, Apache Kafka (Kafka Streams), and Apache Spark (Spark Streaming). One of the most notable and widely discussed features of SPEs is their processing semantics, with “exactly-once” being one of the most sought after and many SPEs claiming to provide “exactly-once” processing semantics.

In other words, data processing is extremely important, blah blah blah, and the topic under discussion is exactly-once processing. Let us discuss it.

There exists a lot of misunderstanding and ambiguity, however, surrounding what exactly “exactly-once” is, what it entails, and what it really means when individual SPEs claim to provide it.

Indeed, it is very important to understand what it is. To do this, it would be nice to give a correct definition before the lengthy reasoning. And who am I to give such damn sensible advice?

I’ll discuss how “exactly-once” processing semantics differ across many popular SPEs and why “exactly-once” can be better described as effectively-once

Inventing new terms is certainly an important task. I love this business. But it requires justification and strong reasoning. Let us try to find them.

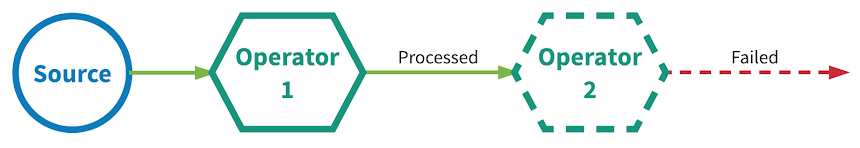

I will not describe the obvious things by the type of directed processing graphs and stuff. Readers can read the original article on their own. Moreover, for analysis these details are not very significant. I will give only a picture:

Further, a description of the semantics follows:

- At-most-once, i.e. not more than once. In complex cases such behavior is extremely difficult to guarantee under specific failure scenarios, network splits, and more. But the author provides a simple solution:

- At-least-once, i.e. not less than once. The scheme is more complicated:

- Exactly-once, finally. What exactly is once?

Events are guaranteed to be processed “exactly once” by all operators in the stream application, even in the event of various failures.

So the guarantee of exactly-once processing holds when processing happens “exactly once”.

Can you feel the power of this definition? Let me rephrase: processing happens once when processing occurs “once”. Well, yes, exactly-once processing must hold even in case of failures, but for distributed systems that is obvious. And the quotation marks hint that something is wrong here. Giving a definition in quotation marks without explaining what they mean is the mark of a deep and thoughtful approach.

The next part describes ways of implementing such semantics. Here I would like to go into more detail.

Two popular mechanisms are typically used to achieve “exactly-once” processing semantics:

- Distributed snapshot/state checkpointing

- At-least-once event delivery plus message deduplication

The first mechanism, snapshots and checkpoints, raises no questions, apart from some details such as efficiency. The second, however, has a small problem that the author has left out.

For some reason, it is implied that the handler can only be deterministic. With a nondeterministic handler, each restart generally produces different output values and states, so deduplication will not work because the outputs differ. Thus the general mechanism would be much more complicated than the one described in the article. Or, to put it bluntly, the mechanism as described is incorrect.
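The problem can be shown with a minimal sketch. It assumes the sink deduplicates by comparing output values, which is how the argument above reads; every name in it (`DedupSink`, `process_with_replay`, the handlers) is hypothetical and does not come from the article or from any real SPE.

```python
import itertools

# Hypothetical stand-in for anything that varies between runs
# (wall-clock time, random numbers, thread scheduling).
_run_counter = itertools.count()


def deterministic_handler(event_id):
    """Same input always yields the same output."""
    return f"out-{event_id}"


def nondeterministic_handler(event_id):
    """Output depends on a per-run value, so a replay differs."""
    return f"out-{event_id}@run{next(_run_counter)}"


class DedupSink:
    """Assumed downstream that drops outputs it has already seen."""

    def __init__(self):
        self.seen = set()
        self.delivered = []

    def receive(self, message):
        if message not in self.seen:
            self.seen.add(message)
            self.delivered.append(message)


def process_with_replay(handler, event_ids):
    """Run every event twice, simulating a lost ack and a replay."""
    sink = DedupSink()
    for event_id in event_ids:
        sink.receive(handler(event_id))  # first attempt, ack assumed lost
        sink.receive(handler(event_id))  # replay under at-least-once delivery
    return sink.delivered
```

With `deterministic_handler` and two events the sink ends up with two outputs, because each replay is recognized as a duplicate. With `nondeterministic_handler` it ends up with four, because no replayed output matches the first attempt.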

Now we come to the tastiest part:

Is exactly-once really exactly-once?

Now let’s reexamine what the “exactly-once” processing semantics really guarantees to the end user. The label “exactly-once” is misleading in describing what is done exactly once.

So we are told that it is time to revisit the concept because there are some inconsistencies. Okay.

Some might think that “exactly-once” describes the guarantee to event processing in which each event in the stream is processed only once. In reality, there is no SPE that can guarantee exactly-once processing. To guarantee that the user-defined logic in each operator only executes once per event is impossible in the face of arbitrary failures, because partial execution of user code is an ever-present possibility.

The dear author should be reminded how modern CPUs work. Each CPU performs a large number of steps in parallel during processing. Moreover, there are branch predictors: when a prediction turns out to be wrong, the processor has already started performing the wrong actions, and those actions and their side effects are rolled back. Thus the same piece of code can suddenly be executed twice even if no failure has occurred!

The attentive reader will immediately exclaim: so what matters is the real output, not how the code is executed. Precisely! What is important is what happened as a result, not how it actually happened. If the result is as if it happened exactly once, then it happened exactly once. Don't you think? Everything else is irrelevant rubbish. Systems are complex, and the resulting abstractions create only the illusion that things are done in a certain way. It seems to us that code is executed sequentially, instruction after instruction: first a read, then a write, then the next instruction. But this is not so; everything is much more complicated. And the essence of correct abstractions is to maintain the illusion of simple and understandable guarantees, without digging into the internals every time you need to assign a value to a variable.

Simply put, the main point is that exactly-once is an abstraction that lets you build applications without thinking about duplicates and lost values, so that everything will be fine even in case of a failure. There is no need to invent new terms for this.

A code example in the article clearly demonstrates a lack of understanding of how to write handlers:

Map (Event event) {
    Print "Event ID: " + event.getId()
    Return event
}

The reader is invited to rewrite the code themselves, so as not to repeat the author's mistakes.
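One possible rewrite, as a rough sketch rather than a definitive answer: the handler stops printing and instead returns the log line as data, so that whatever runs the handler can decide when the effect becomes visible. The sketch is in Python rather than the article's pseudocode, and the names `Event`, `map_event` and `EffectLog` are hypothetical illustrations, not part of any real engine.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Event:
    id: int
    payload: str


def map_event(event: Event) -> Tuple[Event, List[str]]:
    """Pure handler: no printing, side effects are returned as data."""
    return event, [f"Event ID: {event.id}"]


class EffectLog:
    """Assumed runtime component that applies each event's effects once."""

    def __init__(self):
        self.committed_ids = set()
        self.lines = []

    def commit(self, event: Event, effects: List[str]) -> bool:
        if event.id in self.committed_ids:
            return False  # replayed event, effects already applied
        self.committed_ids.add(event.id)
        self.lines.extend(effects)
        return True
```

Because `map_event` has no side effects of its own, running it twice for the same event is harmless: the second `commit` call is a no-op, and the log contains the line once.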

So what does SPEs guarantee when they claim “exactly-once” processing semantics? If user logic cannot be guaranteed to be executed exactly once then what is executed exactly once? When SPEs claim “exactly-once” processing semantics, what they’re actually saying is that they can guarantee that updates to state managed by the SPE are committed only once to a durable backend store.

The user does not need a guarantee about the physical execution of the code. Knowing how a CPU works, it is easy to conclude that such a guarantee is impossible. The main goal is logical exactly-once execution, as if there were no failures at all. Bringing in the additional notion of a “commit to a durable store” only exacerbates the misunderstanding of basic things, because there are implementations of these semantics that need no commit.

More information can be easily found in my article: Heterogeneous Concurrent Exactly-Once Real-Time Processing.

In other words, the processing of an event can happen more than once but the effect of that processing is only reflected once in the durable backend state store.

The user does not care whether there is a “durable backend state store” at all. Only the effect of execution is important, i.e. the consistency and the result of the entire processing. It is worth noting that some tasks need no durable backend state store and yet still require the exactly-once guarantee.
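The "only the effect matters" view can be illustrated with a small sketch. It assumes a running sum as the task, keeps all state in memory, and simulates a failure by discarding a computed result before it replaces the current state. `Snapshot`, `step` and `run` are hypothetical names, and nothing here models a particular SPE.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    next_offset: int  # index of the next event to process
    total: int        # running sum over processed events


def step(snapshot, events):
    """Process one event and return a new snapshot, without mutation."""
    value = events[snapshot.next_offset]
    return Snapshot(snapshot.next_offset + 1, snapshot.total + value)


def run(events, fail_once_at=frozenset()):
    """Return (final total, number of handler executions)."""
    snapshot = Snapshot(0, 0)
    executions = 0
    pending_failures = set(fail_once_at)
    while snapshot.next_offset < len(events):
        candidate = step(snapshot, events)
        executions += 1
        if snapshot.next_offset in pending_failures:
            # Simulated failure: the result is lost and the event is retried.
            pending_failures.discard(snapshot.next_offset)
            continue
        # State and offset are replaced together in a single assignment.
        snapshot = candidate
    return snapshot.total, executions
```

For the events `[1, 2, 3]`, a failure-free run executes the handler three times, while a run with one simulated failure at offset 1 executes it four times. Both return the same total of 6, which is the only thing an observer of the result can see.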

Here at Streamlio, we’ve decided that effectively-once is the best term for describing these processing semantics.

A typical example of the stupid introduction of concepts: write an example and lengthy arguments spanning a whole paragraph, and at the end add that “we define this concept in this way”. The accuracy and clarity of such definitions provoke a really bright and emotional response.

Conclusions

Misunderstanding of the essence of abstractions leads to a distortion of the original meaning of existing concepts and the subsequent invention of new terms from scratch.

[1] Exactly once is NOT exactly the same.

[2] Heterogeneous Concurrent Exactly-Once Real-Time Processing.
